Chapter 1: Node, the Environment Where Containers Actually Run

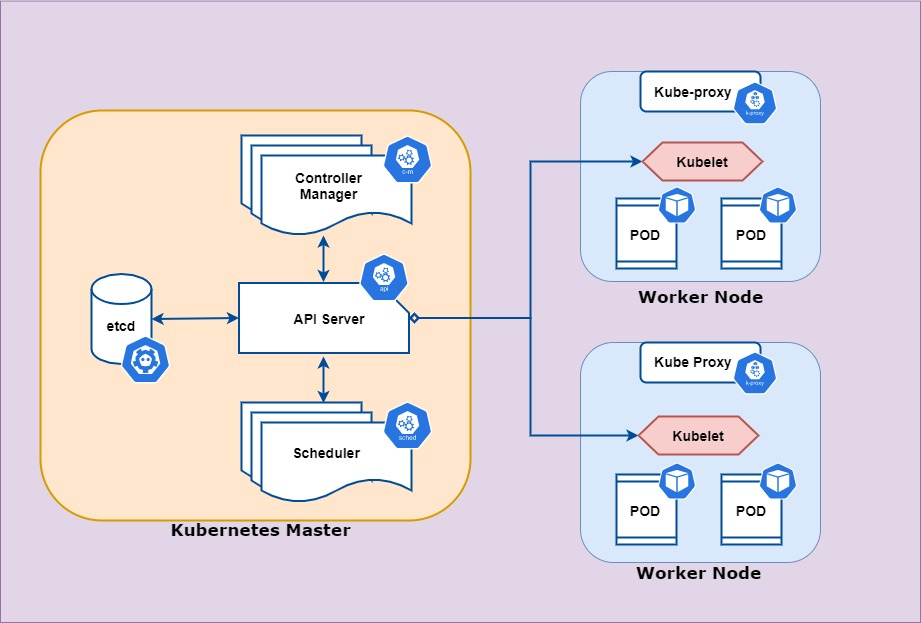

Master Node Architecture

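Use the following commands to inspect the Kubernetes cluster.

`kubectl cluster-info` shows the addresses of the control plane (the API server) and of cluster services such as CoreDNS:

```bash
kubectl cluster-info
```

```text
Kubernetes control plane is running at https://10.0.1.70:6443
CoreDNS is running at https://10.0.1.70:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
```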

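`kubectl config view` displays the kubeconfig that kubectl is using. It contains the clusters (API server addresses), the users (credentials), and the contexts that pair a cluster with a user. Certificate and key data are masked as `DATA+OMITTED`:

```bash
kubectl config view
```

```yaml
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://10.0.1.70:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:
- name: kubernetes-admin
  user:
    client-certificate-data: DATA+OMITTED
    client-key-data: DATA+OMITTED
```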
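Conceptually, kubectl follows `current-context` to a context entry. That entry names a cluster, which supplies the API server address, and a user, which supplies the credentials. The following is a minimal Python sketch of that lookup. It assumes the kubeconfig has already been parsed into a dict that mirrors the `kubectl config view` output. The function name `resolve_current_context` is hypothetical, and real kubectl also merges multiple kubeconfig files and honors overrides such as `--context`.

```python
# A kubeconfig modeled as a plain dict mirroring the `kubectl config view`
# output; in practice you would load ~/.kube/config with a YAML parser.
kubeconfig = {
    "apiVersion": "v1",
    "kind": "Config",
    "clusters": [
        {"name": "kubernetes",
         "cluster": {"server": "https://10.0.1.70:6443",
                     "certificate-authority-data": "DATA+OMITTED"}},
    ],
    "contexts": [
        {"name": "kubernetes-admin@kubernetes",
         "context": {"cluster": "kubernetes", "user": "kubernetes-admin"}},
    ],
    "current-context": "kubernetes-admin@kubernetes",
    "users": [
        {"name": "kubernetes-admin",
         "user": {"client-certificate-data": "DATA+OMITTED",
                  "client-key-data": "DATA+OMITTED"}},
    ],
}


def resolve_current_context(cfg: dict) -> tuple[str, str, str]:
    """Return (context name, API server URL, user name) for current-context."""
    ctx_name = cfg["current-context"]
    # Find the named context, then the cluster and user it points to.
    ctx = next(c["context"] for c in cfg["contexts"] if c["name"] == ctx_name)
    cluster = next(c["cluster"] for c in cfg["clusters"]
                   if c["name"] == ctx["cluster"])
    user = next(u["name"] for u in cfg["users"] if u["name"] == ctx["user"])
    return ctx_name, cluster["server"], user


if __name__ == "__main__":
    print(resolve_current_context(kubeconfig))
```

Switching clusters is therefore just a matter of changing `current-context` (for example with `kubectl config use-context`). The cluster and user entries themselves stay untouched.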

`kubectl config view --raw` prints the same kubeconfig, but with the real base64-encoded certificate and private key data instead of `DATA+OMITTED`. These credentials grant administrator access to the cluster, so treat this output as a secret:

```bash
kubectl config view --raw
```

```yaml
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURCVENDQWUy...
    server: https://10.0.1.70:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:
- name: kubernetes-admin
  user:
    client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURJVENDQWdt...
    client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcEFJQkFB...
```
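The `*-data` fields are base64-encoded PEM text. Decoding `certificate-authority-data` yields a block that starts with `-----BEGIN CERTIFICATE-----`, and `client-key-data` starts with `-----BEGIN RSA PRIVATE KEY-----`. The short Python sketch below decodes such a value with the standard `base64` module and reports the PEM block type. The helper name `pem_block_type` is hypothetical, and the sample body is fake, not a real certificate.

```python
import base64


def pem_block_type(data_b64: str) -> str:
    """Decode a kubeconfig *-data value and return its PEM block type,
    e.g. "CERTIFICATE" or "RSA PRIVATE KEY"."""
    pem = base64.b64decode(data_b64).decode("ascii")
    first_line = pem.splitlines()[0]  # "-----BEGIN <TYPE>-----"
    # Strip the surrounding dashes, then the BEGIN keyword.
    return first_line.strip("-").removeprefix("BEGIN ").strip()


if __name__ == "__main__":
    # Hypothetical sample with a fake body, encoded the same way kubeconfig does.
    sample = base64.b64encode(
        b"-----BEGIN CERTIFICATE-----\nMIIB...\n-----END CERTIFICATE-----\n"
    ).decode("ascii")
    print(pem_block_type(sample))  # CERTIFICATE
```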

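`kubectl config get-contexts` lists the contexts defined in the kubeconfig. The `*` in the CURRENT column marks the one currently in use:

```bash
kubectl config get-contexts
```

```text
CURRENT   NAME                          CLUSTER      AUTHINFO           NAMESPACE
*         kubernetes-admin@kubernetes   kubernetes   kubernetes-admin
```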
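The CURRENT marker follows a simple rule: the context whose name equals `current-context` gets a `*`. The Python sketch below renders contexts that way. It uses simplified single-space columns instead of kubectl's aligned table. The function name and the second context `dev@kubernetes` are hypothetical, added only for illustration.

```python
def format_contexts(contexts: list[dict], current: str) -> list[str]:
    """Render kubeconfig contexts roughly like `kubectl config get-contexts`,
    using single spaces instead of aligned columns."""
    rows = []
    for entry in contexts:
        ctx = entry["context"]
        marker = "*" if entry["name"] == current else " "
        # NAMESPACE is optional; an empty value means the "default" namespace.
        row = f'{marker} {entry["name"]} {ctx["cluster"]} {ctx["user"]} {ctx.get("namespace", "")}'
        rows.append(row.rstrip())
    return rows


if __name__ == "__main__":
    contexts = [
        {"name": "kubernetes-admin@kubernetes",
         "context": {"cluster": "kubernetes", "user": "kubernetes-admin"}},
        # Hypothetical second context, for illustration only.
        {"name": "dev@kubernetes",
         "context": {"cluster": "kubernetes", "user": "dev-user", "namespace": "dev"}},
    ]
    for line in format_contexts(contexts, "kubernetes-admin@kubernetes"):
        print(line)
```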

`kubectl api-resources` lists every resource type the API server supports. For each one it shows the name, the short name (usable in place of the full name, e.g. `kubectl get po`), the API group/version, whether the resource is namespaced, and its kind. The output is long, so it is piped through `more`:

```bash
kubectl api-resources | more
```

```text
NAME                              SHORTNAMES   APIVERSION                             NAMESPACED   KIND
bindings                                       v1                                     true         Binding
componentstatuses                 cs           v1                                     false        ComponentStatus
configmaps                        cm           v1                                     true         ConfigMap
endpoints                         ep           v1                                     true         Endpoints
events                            ev           v1                                     true         Event
limitranges                       limits       v1                                     true         LimitRange
namespaces                        ns           v1                                     false        Namespace
nodes                             no           v1                                     false        Node
persistentvolumeclaims            pvc          v1                                     true         PersistentVolumeClaim
persistentvolumes                 pv           v1                                     false        PersistentVolume
pods                              po           v1                                     true         Pod
podtemplates                                   v1                                     true         PodTemplate
replicationcontrollers            rc           v1                                     true         ReplicationController
resourcequotas                    quota        v1                                     true         ResourceQuota
secrets                                        v1                                     true         Secret
serviceaccounts                   sa           v1                                     true         ServiceAccount
services                          svc          v1                                     true         Service
mutatingwebhookconfigurations                  admissionregistration.k8s.io/v1        false        MutatingWebhookConfiguration
validatingwebhookconfigurations                admissionregistration.k8s.io/v1        false        ValidatingWebhookConfiguration
customresourcedefinitions         crd,crds     apiextensions.k8s.io/v1                false        CustomResourceDefinition
apiservices                                    apiregistration.k8s.io/v1              false        APIService
controllerrevisions                            apps/v1                                true         ControllerRevision
daemonsets                        ds           apps/v1                                true         DaemonSet
deployments                       deploy       apps/v1                                true         Deployment
replicasets                       rs           apps/v1                                true         ReplicaSet
statefulsets                      sts          apps/v1                                true         StatefulSet
selfsubjectreviews                             authentication.k8s.io/v1               false        SelfSubjectReview
tokenreviews                                   authentication.k8s.io/v1               false        TokenReview
localsubjectaccessreviews                      authorization.k8s.io/v1                true         LocalSubjectAccessReview
selfsubjectaccessreviews                       authorization.k8s.io/v1                false        SelfSubjectAccessReview
selfsubjectrulesreviews                        authorization.k8s.io/v1                false        SelfSubjectRulesReview
subjectaccessreviews                           authorization.k8s.io/v1                false        SubjectAccessReview
horizontalpodautoscalers          hpa          autoscaling/v2                         true         HorizontalPodAutoscaler
cronjobs                          cj           batch/v1                               true         CronJob
jobs                                           batch/v1                               true         Job
certificatesigningrequests        csr          certificates.k8s.io/v1                 false        CertificateSigningRequest
leases                                         coordination.k8s.io/v1                 true         Lease
endpointslices                                 discovery.k8s.io/v1                    true         EndpointSlice
events                            ev           events.k8s.io/v1                       true         Event
flowschemas                                    flowcontrol.apiserver.k8s.io/v1beta3   false        FlowSchema
prioritylevelconfigurations                    flowcontrol.apiserver.k8s.io/v1beta3   false        PriorityLevelConfiguration
ingressclasses                                 networking.k8s.io/v1                   false        IngressClass
ingresses                         ing          networking.k8s.io/v1                   true         Ingress
networkpolicies                   netpol       networking.k8s.io/v1                   true         NetworkPolicy
runtimeclasses                                 node.k8s.io/v1                         false        RuntimeClass
poddisruptionbudgets              pdb          policy/v1                              true         PodDisruptionBudget
clusterrolebindings                            rbac.authorization.k8s.io/v1           false        ClusterRoleBinding
clusterroles                                   rbac.authorization.k8s.io/v1           false        ClusterRole
rolebindings                                   rbac.authorization.k8s.io/v1           true         RoleBinding
roles                                          rbac.authorization.k8s.io/v1           true         Role
priorityclasses                   pc           scheduling.k8s.io/v1                   false        PriorityClass
csidrivers                                     storage.k8s.io/v1                      false        CSIDriver
csinodes                                       storage.k8s.io/v1                      false        CSINode
csistoragecapacities                           storage.k8s.io/v1                      true         CSIStorageCapacity
storageclasses                    sc           storage.k8s.io/v1                      false        StorageClass
volumeattachments                              storage.k8s.io/v1                      false        VolumeAttachment
```
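The APIVERSION column has the form `group/version`. Core resources such as `pods` and `services` show a bare `v1` because they belong to the unnamed "core" group. That group is served under `/api/<version>`, while every named group is served under `/apis/<group>/<version>`. The same pattern appears in the CoreDNS URL printed by `kubectl cluster-info` (`/api/v1/namespaces/kube-system/services/...`). The Python sketch below splits the column and builds the matching REST path for a resource collection. The function names are hypothetical, and subresources and proxy paths are ignored.

```python
from typing import Optional


def split_api_version(api_version: str) -> tuple[str, str]:
    """Split an APIVERSION value such as "apps/v1" into (group, version).

    Core resources report a bare version like "v1"; their group is
    returned as "" (the unnamed core group).
    """
    group, _, version = api_version.rpartition("/")
    return group, version


def resource_path(api_version: str, resource: str,
                  namespace: Optional[str] = None) -> str:
    """Build the REST path of a resource collection on the API server."""
    group, version = split_api_version(api_version)
    # The core group lives under /api, every named group under /apis/<group>.
    path = f"/api/{version}" if not group else f"/apis/{group}/{version}"
    if namespace is not None:  # only NAMESPACED=true resources take this
        path += f"/namespaces/{namespace}"
    return f"{path}/{resource}"


if __name__ == "__main__":
    print(resource_path("v1", "services", "kube-system"))
    print(resource_path("apps/v1", "deployments", "default"))
    print(resource_path("v1", "nodes"))  # cluster-scoped: no namespace segment
```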

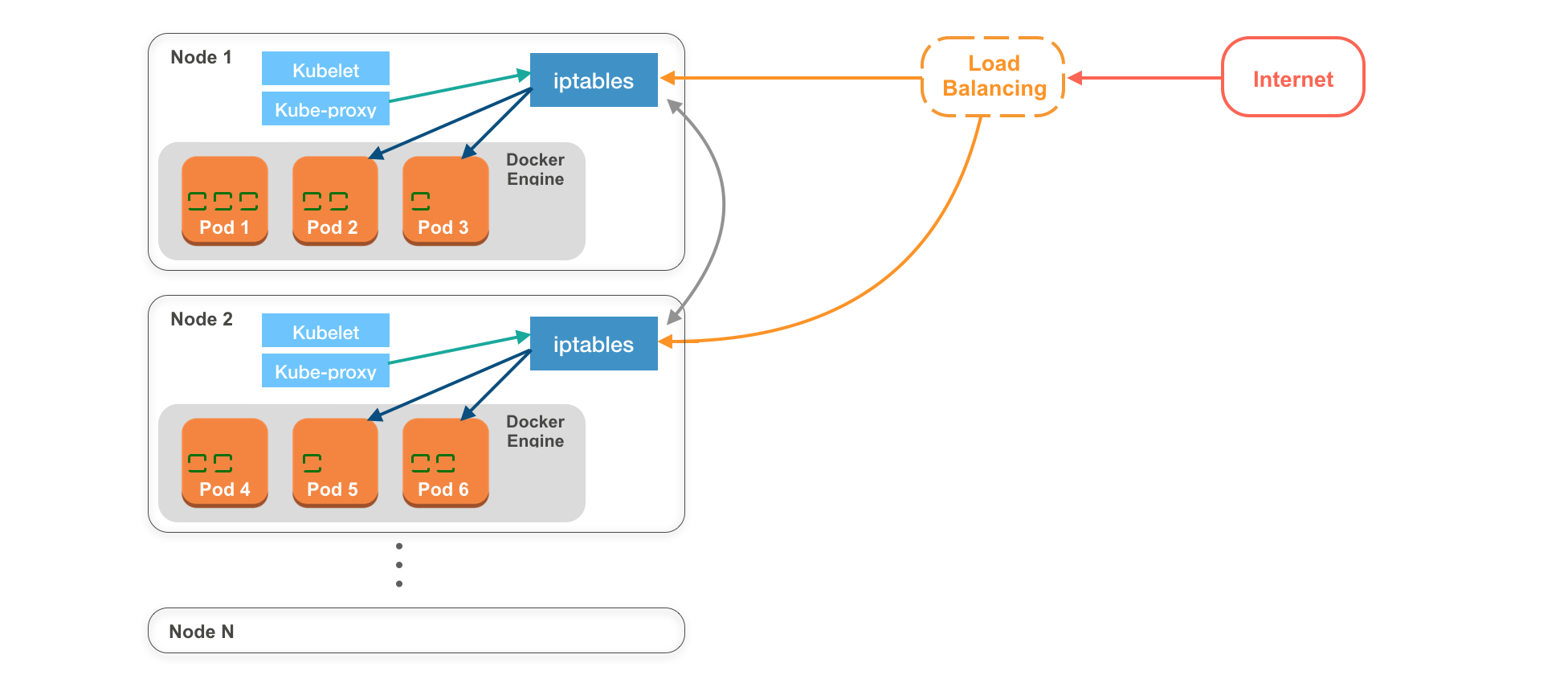

Worker Node Architecture

Pod

The smallest deployable unit in Kubernetes (K8s). A Pod contains one or more containers.
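To make "one Pod, one or more containers" concrete, here is a minimal Pod manifest written as a Python dict and printed as JSON. kubectl accepts JSON manifests as well as YAML, so the printed text could be saved to a file and applied with `kubectl apply -f`. The Pod name, container names, and images are hypothetical examples. Containers in the same Pod share the Pod's network namespace, so they can reach each other on `localhost`.

```python
import json

# Hypothetical two-container Pod: names and images are illustrative only.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web"},
    "spec": {
        "containers": [
            {"name": "app", "image": "nginx:1.25",
             "ports": [{"containerPort": 80}]},
            # A second container that just stays alive; a real sidecar would
            # do something useful, such as shipping logs.
            {"name": "sidecar", "image": "busybox:1.36",
             "command": ["sh", "-c", "tail -f /dev/null"]},
        ]
    },
}

if __name__ == "__main__":
    print(json.dumps(pod, indent=2))
```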

Container Engine

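Determines the runtime environment in which the Node's containers run. Docker Engine was long the usual default. Kubernetes removed its built-in Docker support (dockershim) in v1.24, however, so newer clusters such as the v1.28 cluster used in this chapter typically run a CRI-compatible runtime such as containerd or CRI-O.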

iptables

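Each Node has its own iptables, the firewall built into Linux. Besides controlling which connections are allowed in, iptables also manages network traffic and decides which Pod an incoming request is forwarded to.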
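The way iptables "decides which Pod" is worth a concrete look. In kube-proxy's iptables mode, a Service's chain holds one rule per backing Pod, tried in order. Rule i matches a new connection at random with probability 1/(n - i), using the `statistic` match in `random` mode, and the last rule always matches. As a result, each of the n Pods receives an equal share overall. The Python sketch below models that arithmetic with exact fractions and simulates the rule walk. The function names and Pod IPs are hypothetical, and the sketch models the rule ordering, not iptables itself.

```python
import random
from fractions import Fraction


def rule_probabilities(n: int) -> list[Fraction]:
    """Match probability of each of n rules, tried top to bottom.

    Rule i matches with probability 1/(n - i); the last rule is always 1.
    """
    return [Fraction(1, n - i) for i in range(n)]


def effective_shares(n: int) -> list[Fraction]:
    """Overall probability that a new connection lands on each endpoint."""
    shares, remaining = [], Fraction(1)
    for p in rule_probabilities(n):
        shares.append(remaining * p)  # reached this rule and matched it
        remaining *= 1 - p            # fell through to the next rule
    return shares


def pick_endpoint(endpoints: list[str], rng: random.Random) -> str:
    """Simulate one new connection walking the rule list."""
    n = len(endpoints)
    for i, ep in enumerate(endpoints):
        if rng.random() < 1 / (n - i):  # last rule is 1/1: always matches
            return ep
    raise ValueError("no endpoints")


if __name__ == "__main__":
    print([str(p) for p in rule_probabilities(3)])  # ['1/3', '1/2', '1']
    print([str(s) for s in effective_shares(3)])    # ['1/3', '1/3', '1/3']
    rng = random.Random(0)
    pods = ["10.244.1.5", "10.244.2.7", "10.244.2.8"]  # hypothetical Pod IPs
    counts = {p: 0 for p in pods}
    for _ in range(30_000):
        counts[pick_endpoint(pods, rng)] += 1
    print(counts)  # roughly 10,000 each
```

The choice applies per new connection. Later packets of the same connection follow the connection-tracking entry rather than being re-balanced.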

kubelet

- kubelet acts as the node agent. It manages all Pods on its Node and communicates continuously with the master node.
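kubelet's job can be pictured as a reconcile loop. It repeatedly compares the Pods the control plane has assigned to its Node with the Pods actually running, then starts or stops containers to close the gap. The Python sketch below runs one simplified pass of that loop. The function names and Pod names are hypothetical. The real kubelet also runs health probes, reports status back to the API server, and talks to the container runtime through the CRI.

```python
def reconcile(desired: set[str], running: set[str]) -> tuple[set[str], set[str]]:
    """One comparison step: which Pods must start and which must stop."""
    to_start = desired - running   # assigned to this Node but not running yet
    to_stop = running - desired    # running but no longer assigned here
    return to_start, to_stop


def sync_once(desired: set[str], running: set[str]) -> set[str]:
    """Apply one reconcile pass and return the new set of running Pods."""
    to_start, to_stop = reconcile(desired, running)
    for pod in sorted(to_start):
        print(f"start {pod}")  # the real kubelet asks the runtime via CRI
    for pod in sorted(to_stop):
        print(f"stop {pod}")
    return (running | to_start) - to_stop


if __name__ == "__main__":
    desired = {"web-1", "web-2"}       # hypothetical Pods assigned to this Node
    running = {"web-2", "old-job-1"}   # hypothetical Pods currently running
    running = sync_once(desired, running)
    print(sorted(running))  # ['web-1', 'web-2']
```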

kube-proxy

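- kube-proxy watches the control plane for changes to Services and the Pods behind them. In its default iptables mode, it writes the corresponding forwarding rules into iptables, so that iptables always reflects the current set of Pods. For example, when a new Pod is created and becomes ready, kube-proxy updates iptables so that the Pod can be reached by other objects in the Kubernetes cluster.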
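The event-driven part of kube-proxy's job can be sketched as a table of Service → ready Pod IPs that is updated on every change and then re-rendered into forwarding rules. The Python toy model below assumes events arrive as simple `(kind, service, pod_ip)` calls. The real kube-proxy receives them by watching the API server. The class name, event kinds, and rule format are hypothetical and do not follow real iptables syntax.

```python
from collections import defaultdict


class EndpointTable:
    """Toy model of the state kube-proxy keeps in sync: Service -> ready Pod IPs."""

    def __init__(self) -> None:
        self.endpoints: dict[str, set[str]] = defaultdict(set)

    def on_event(self, kind: str, service: str, pod_ip: str) -> None:
        # The real kube-proxy learns about changes by watching the API server;
        # here events are fed in by hand.
        if kind == "ready":
            self.endpoints[service].add(pod_ip)
        elif kind == "deleted":
            self.endpoints[service].discard(pod_ip)
        else:
            raise ValueError(f"unknown event kind: {kind}")

    def render_rules(self, service: str) -> list[str]:
        """Illustrative forwarding rules (NOT real iptables syntax)."""
        return [f"{service} -> {ip}" for ip in sorted(self.endpoints[service])]


if __name__ == "__main__":
    table = EndpointTable()
    table.on_event("ready", "web", "10.244.1.5")   # hypothetical Service and Pod IPs
    table.on_event("ready", "web", "10.244.2.7")
    print(table.render_rules("web"))
    table.on_event("deleted", "web", "10.244.1.5")
    print(table.render_rules("web"))
```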

Load balancing

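- In practice, external requests first go to a load balancer, which decides which Node receives each request. The load balancer is usually provided by the infrastructure provider hosting the Nodes, such as AWS ELB. Alternatively, you can run your own using software dedicated to load balancing, such as Nginx or HAProxy.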
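Round robin is a common default strategy for a load balancer choosing a Node. It is the default for Nginx upstreams and for HAProxy's `balance` setting. The Python sketch below is a round-robin balancer that skips Nodes marked as down. The class and method names are hypothetical, and real load balancers add active health checks, weights, and other algorithms. The Node names come from the `kubectl get nodes` output in this chapter.

```python
class RoundRobinBalancer:
    """Hand out Nodes in turn, skipping any that are marked down."""

    def __init__(self, nodes: list[str]) -> None:
        self.nodes = list(nodes)
        self.healthy = set(self.nodes)
        self._next = 0  # index of the next Node to try

    def mark_down(self, node: str) -> None:
        self.healthy.discard(node)

    def mark_up(self, node: str) -> None:
        if node in self.nodes:
            self.healthy.add(node)

    def pick(self) -> str:
        # Try each Node at most once per call, starting where we left off.
        for _ in range(len(self.nodes)):
            node = self.nodes[self._next]
            self._next = (self._next + 1) % len(self.nodes)
            if node in self.healthy:
                return node
        raise RuntimeError("no healthy Node available")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["k8s-wrk-1", "k8s-wrk-2"])
    print([lb.pick() for _ in range(4)])
    lb.mark_down("k8s-wrk-1")
    print([lb.pick() for _ in range(2)])
```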

How a Node Works in Practice

From creating a new Pod to having it receive a user's request:

- Create a Pod
  - master node → kubelet creates a Pod
  - kube-proxy → iptables adds a route to the Pod and marks the Pod as available
- A user sends a request
  - Load Balancer → Worker Node → iptables → Pod
  - If the Worker Node has no runnable Pod:
    - Worker Node → iptables → another Worker Node
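The request path in the steps above can be traced with a small Python simulation. The load balancer hands the request to a Worker Node, whose iptables either delivers it to a local Pod or forwards it to another Worker Node that has one. The `WorkerNode` class, the `route` function, and the Pod name are hypothetical, and the simulation always picks the first available Pod for simplicity.

```python
from dataclasses import dataclass, field


@dataclass
class WorkerNode:
    name: str
    pods: list[str] = field(default_factory=list)  # runnable Pods on this Node


def route(entry: WorkerNode, cluster: list[WorkerNode]) -> list[str]:
    """Return the hops a request takes after the load balancer picks `entry`."""
    hops = ["load-balancer", entry.name, "iptables"]
    if entry.pods:
        return hops + [entry.pods[0]]      # a local Pod can serve it
    for other in cluster:                  # otherwise forward to another Node
        if other is not entry and other.pods:
            return hops + [other.name, other.pods[0]]
    raise RuntimeError("no runnable Pod anywhere in the cluster")


if __name__ == "__main__":
    w1 = WorkerNode("k8s-wrk-1", ["web-1"])  # hypothetical Pod name
    w2 = WorkerNode("k8s-wrk-2")             # no runnable Pod here
    print(" -> ".join(route(w1, [w1, w2])))
    print(" -> ".join(route(w2, [w1, w2])))
```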

Checking Node Status

Pods run on Nodes, so we first need to check the status of the Nodes:

```bash
kubectl get nodes
```

```text
NAME        STATUS   ROLES           AGE   VERSION
k8s-msr-1   Ready    control-plane   20m   v1.28.0
k8s-wrk-1   Ready    <none>          19m   v1.28.0
k8s-wrk-2   Ready    <none>          19m   v1.28.0
```
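A quick scripted check that every Node is `Ready` can be done by parsing the `kubectl get nodes` table. The Python sketch below splits the default table on whitespace, which works here only because none of the cells contain spaces. The function names are hypothetical. For real scripts, `kubectl get nodes -o json` gives structured output that is more robust than parsing the table.

```python
SAMPLE = """\
NAME        STATUS   ROLES           AGE   VERSION
k8s-msr-1   Ready    control-plane   20m   v1.28.0
k8s-wrk-1   Ready    <none>          19m   v1.28.0
k8s-wrk-2   Ready    <none>          19m   v1.28.0
"""


def parse_nodes(output: str) -> list[dict[str, str]]:
    """Turn the default `kubectl get nodes` table into one dict per Node.

    Assumes no cell contains spaces, which holds for this output.
    """
    lines = [ln for ln in output.splitlines() if ln.strip()]
    header = lines[0].split()
    return [dict(zip(header, ln.split())) for ln in lines[1:]]


def not_ready(nodes: list[dict[str, str]]) -> list[str]:
    """Names of Nodes whose STATUS is anything other than Ready."""
    return [n["NAME"] for n in nodes if n["STATUS"] != "Ready"]


if __name__ == "__main__":
    nodes = parse_nodes(SAMPLE)
    print([n["NAME"] for n in nodes])  # ['k8s-msr-1', 'k8s-wrk-1', 'k8s-wrk-2']
    print(not_ready(nodes))            # []
```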